The prompt-box paradox

Why AI needs generative interfaces

By Doug Cook, 1 Dec 2025

Andrej Karpathy recently noted that interacting with ChatGPT feels a lot like “talking to an operating system through a terminal.” He’s right.

We’ve built systems capable of generating any form of information, but we continue to confine them to chat.

The prompt box presents users with infinite possibilities but few affordances. Faced with a blank field, people have little indication of a system’s capabilities or limitations. It’s a modern-day command line. Though the prompt box is conversational, the burden still falls on the user to enter and refine a prompt.

The power of affordances

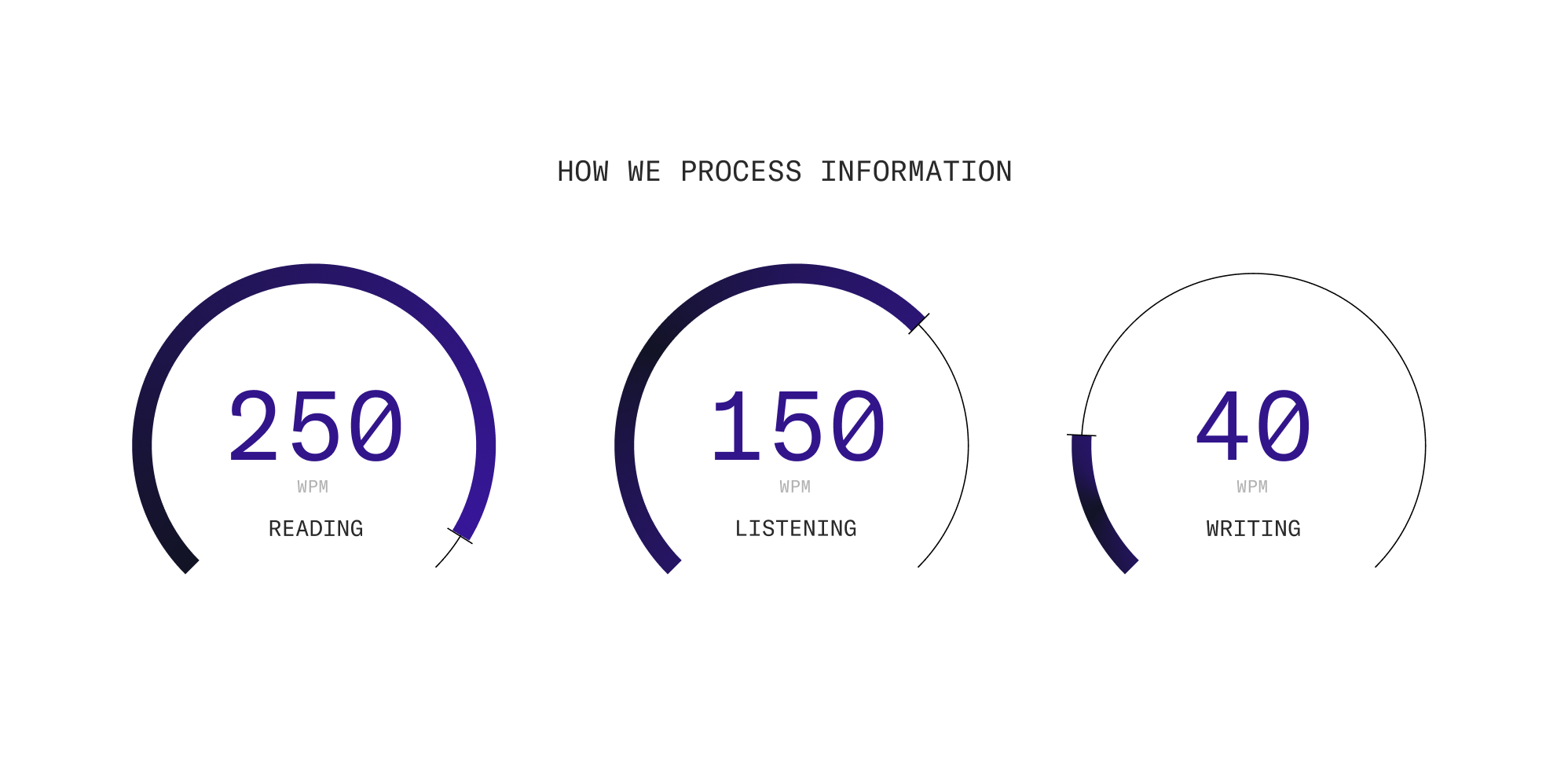

Consider the speed at which people process information. Visual pattern recognition happens near-instantaneously. Reading occurs at roughly 250 words per minute, listening at about 150. Writing crawls at around 40. Chat interfaces trap users in the slowest mode of interaction.

It gets worse when you consider recognition versus recall. People can identify the right option from ten choices almost instantly, but recalling and writing that same option takes significantly longer.

How did we get here?

The industry’s convergence on chat interfaces isn’t completely surprising. The first LLMs were genuinely conversational. But then something changed. Models became multimodal. They learned to generate structured data, manipulate code, create visualizations, and orchestrate complex workflows. The technology evolved, but our interfaces didn’t.

We’ve essentially taken the most powerful interface-generation technology ever created and constrained it to chat. So much so that an entire cottage industry has sprung up teaching people how to prompt. Courses, template libraries, and prompt guides now exist solely to help people communicate with systems that should understand them already.

Interfaces that map to intent

Graphical user interfaces democratized computing by making capabilities visible and reducing cognitive load. We’re at a similar inflection point with AI. What’s needed isn’t better chat interfaces but adaptive systems that generate the right interaction pattern for a given context.

Imagine trying to understand your business performance. Instead of refining prompts, an interface materializes around your intent. It pulls in the relevant data, generates visualizations that respond to your questions, and offers controls that evolve as your understanding deepens. The system remembers your goals across sessions, reshaping itself automatically. When the task is done, the interface dissolves, leaving only the insights you created.

This isn’t speculative. LLMs can do far more than generate text and images. They’re universal interface generators. The same model that can interpret complex datasets can generate the visualization tools needed to explore them.
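As a rough illustration of the idea, the sketch below assumes a model returns a declarative description of an interface rather than prose, and a small renderer turns that description into HTML. The JSON shape, the component names (`chart`, `select`), and the hard-coded `model_output` are all hypothetical stand-ins, not any product’s actual format.

```python
import json
from html import escape

# Hypothetical allow-list of components the host application knows how to draw.
def render_chart(props):
    # A placeholder element; a real app would hand this to a charting library.
    return (f'<figure data-chart="{escape(props["kind"])}" '
            f'data-metric="{escape(props["metric"])}"></figure>')

def render_select(props):
    options = "".join(f"<option>{escape(o)}</option>" for o in props["options"])
    return f'<label>{escape(props["label"])} <select>{options}</select></label>'

RENDERERS = {"chart": render_chart, "select": render_select}

def render_spec(spec_json):
    """Render only known component types; silently skip anything else."""
    spec = json.loads(spec_json)
    parts = []
    for component in spec["components"]:
        renderer = RENDERERS.get(component["type"])
        if renderer is not None:
            parts.append(renderer(component["props"]))
    return "\n".join(parts)

# Stand-in for a model response (assumed shape, hard-coded for the example).
model_output = json.dumps({
    "components": [
        {"type": "chart", "props": {"kind": "line", "metric": "revenue"}},
        {"type": "select", "props": {"label": "Period", "options": ["Q1", "Q2"]}},
        {"type": "script", "props": {"src": "unknown"}},  # not on the allow-list
    ]
})

html = render_spec(model_output)
print(html)
```

The allow-list is the design choice worth noting: the model proposes an interface, but the host application decides which components it is willing to render.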

Semantic primitives

Generative UI doesn’t require new infrastructure. It can be built on the web we have today. But as these systems mature, we’ll want a new set of primitives.

Web technologies give us structural primitives for layout and presentation: `<div>`, `<span>`, `<button>`. We add meaning through code. Future platforms will need semantic primitives: elements that encode meaning and behavior from the start.

Context primitives that interpret intent. Interfaces that learn your preferences automatically.

Temporal primitives that preserve continuity across sessions. Interfaces that remember your goals and evolve with them.

Inference primitives that anticipate the next step. Elements that adapt in real time.

Collaborative primitives where people and AI share space. Workflows where human and AI contributions interact fluidly.

These primitives would shift interfaces from structure to semantics.
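One way to picture the four primitives is as fields on a single element, so that meaning travels with the element instead of being bolted on in application code. This is only a sketch: `SemanticElement` and every field and method name on it are invented for illustration and do not correspond to any existing platform.

```python
from dataclasses import dataclass, field

@dataclass
class SemanticElement:
    """Hypothetical element that carries meaning alongside structure."""
    intent: str                                        # context: what the user is trying to do
    goals: list = field(default_factory=list)          # temporal: goals kept across sessions
    next_steps: list = field(default_factory=list)     # inference: anticipated follow-ups
    contributions: list = field(default_factory=list)  # collaborative: (author, content) pairs

    def contribute(self, author, content):
        """Record who added what, so human and AI input share one space."""
        self.contributions.append((author, content))

    def resume(self, previous):
        """Carry goals forward from an earlier session's element, without duplicates."""
        for goal in previous.goals:
            if goal not in self.goals:
                self.goals.append(goal)

# Example: today's element picks up where last week's session left off.
last_week = SemanticElement(intent="review sales", goals=["grow Q3 revenue"])
today = SemanticElement(intent="review sales", next_steps=["compare regions"])
today.resume(last_week)
today.contribute("person", "Flagged the dip in July")
today.contribute("ai", "Suggested a regional breakdown")
```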

The challenge of latent capabilities

This creates new design challenges. When interfaces are generated contextually, capabilities become latent. This improves focus, but risks making features undiscoverable.

Traditional UIs reveal features through navigation. Users can browse menus, hover over buttons, and explore toolbars to understand what’s possible. In generative systems, there are no menus to browse.

Generative UI demands new approaches to progressive disclosure. The interface must hint at what’s possible without overwhelming users or making them feel like they’re operating a black box. Without this, it becomes just as opaque as the prompt box it replaces.
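A minimal sketch of one such approach, assuming the system keeps a catalog of capabilities tagged with the topics they relate to: surface only the few that overlap with what the user is currently doing. The function name, the tags, and the simple overlap score are hypothetical; a real system would likely rank with something richer.

```python
def suggest_capabilities(context_terms, capabilities, limit=3):
    """Return up to `limit` capability names whose tags overlap the current context."""
    scored = []
    for name, tags in capabilities.items():
        overlap = len(set(context_terms) & set(tags))
        if overlap:  # hide anything unrelated to the current context
            scored.append((overlap, name))
    # Highest overlap first; ties broken alphabetically for stable output.
    scored.sort(key=lambda pair: (-pair[0], pair[1]))
    return [name for _, name in scored[:limit]]

# Hypothetical catalog of latent capabilities and their topic tags.
catalog = {
    "Compare quarters": {"revenue", "quarter"},
    "Forecast trend": {"revenue", "trend"},
    "Export as PDF": {"share"},
}

hints = suggest_capabilities({"revenue", "quarter"}, catalog)
print(hints)
```

Capping the list keeps the hints from overwhelming the user, while the ranking keeps the most relevant capabilities from staying hidden.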

What comes next

The most sophisticated AI products are already blending conversational elements with visual, interactive tools, making it easy to switch between dialogue and direct manipulation. Chat doesn’t need to be a universal interface. It’s one modality among many.

Just as graphical interfaces replaced commands with affordances, generative UI will replace prompts with adaptive interfaces. Not discrete applications with persistent menus and toolbars, but contextual controls that render on demand.

The next interface revolution won’t be designed. It will be generated.

Doug Cook

Doug is the founder of thirteen23. When he’s not providing strategic creative leadership on our engagements, he can be found practicing the time-honored art of getting out of the way.